- Danny Driess1,2

- Fei Xia1

- Mehdi S. M. Sajjadi3

- Corey Lynch1

- Aakanksha Chowdhery3

- Brian Ichter1

- Ayzaan Wahid1

- Jonathan Tompson1

- Quan Vuong1

- Tianhe Yu1

- Wenlong Huang1

- Yevgen Chebotar1

- Pierre Sermanet1

- Daniel Duckworth3

- Sergey Levine1

- Vincent Vanhoucke1

- Karol Hausman1

- Marc Toussaint2

- Klaus Greff3

- Andy Zeng1

- Igor Mordatch3

- Pete Florence1

1

2

2

3

3

Abstract

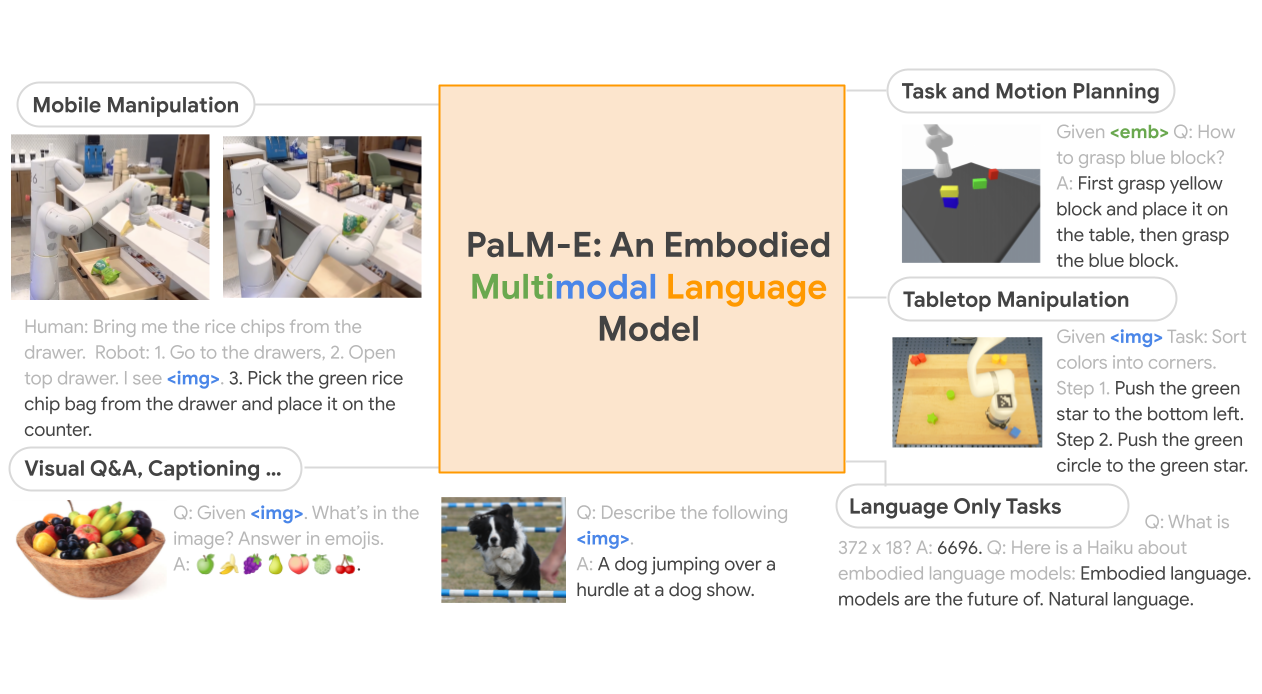

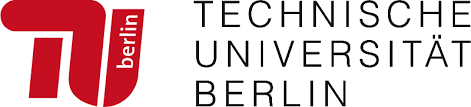

Large language models have been demonstrated to perform complex tasks. However, enabling general inference in the real world, e.g. for robotics problems, raises the challenge of grounding. We propose embodied language models to directly incorporate real-world continuous sensor modalities into language models and thereby establish the link between words and percepts. Input to our embodied language model are multi-modal sentences that interleave visual, continuous state estimation, and textual input encodings. We train these encodings end-to-end, in conjunction with a pre-trained large language model, for multiple embodied tasks, including sequential robotic manipulation planning, visual question answering, and captioning. Our evaluations show that PaLM-E, a single large embodied multimodal model, can address a variety of embodied reasoning tasks, from a variety of observation modalities, on multiple embodiments, and further, exhibits positive transfer: the model benefits from diverse joint training across internet-scale language, vision, and visual-language domains. Our largest model, PaLM-E-562B with 562B parameters, in addition to being trained on robotics tasks, is a visual-language generalist with state-of-the-art performance on OK-VQA, and retains generalist language capabilities with increasing scale.

Approach

The main architectural idea of PaLM-E is to inject continuous, embodied observations such as images, state estimates, or other sensor modalities into the language embedding space of a pre-trained language model. This is realized by encoding the continuous observations into a sequence of vectors with the same dimension as the embedding space of the language tokens. The continuous information is hence injected into the language model in an analogous way to language tokens. PaLM-E is a decoder-only LLM that generates textual completions autoregressively given a prefix or prompt. We call our model PaLM-E, since we use PaLM (Chowdhery et al., 2022) as the pre-trained language model, and make it Embodied.

Results

We show a few example videos showing how PaLM-E can be used to plan and execute long horizon tasks on two different real embodiments. Please note, that all of these results were obtained using the same model trained on all data. In the first video, we execute a long-horizon instruction "bring me the rice chips from the drawer" that includes multiple planning steps as well as incorporating visual feedback from the robot's camera. Finally, show another example on the same robot where the instruction is "bring me a green star". Green star is an object that this robot wasn't directly exposed to.

In the following part, we show PaLM-E controlling a table top robot arranging blocks. We show the PaLM-E can successfully plan over multiple stages based on visual and language input. Our model is able to successfully plan a long-horizon task "sort blocks by colors into different corners" . Another example of planning over multiple stages and incorporating visual feedback over long time horizons. Finally, we demonstrate another example of long-horizon pushing tasks on this robot. The first instruction is "move remaining blocks to the group". PaLM-E sequences step-by-step commands to the low-level policy such as "move the yellow hexagon to the green star", and "move the blue triangle to the group".

Next, we demonstrate two examples of generalization. In the case below the instruction is "push red blocks to the coffee cup". The dataset contains only three demonstrations with the coffee cup in them, and none of them included red blocks. We show another generalization example, where the instruction is "push green blocks to the turtle". The robot is able to successfully execute this task even though it has never seen the turtle before.

In addition to unlocking new capabilities in robot planning. PaLM-E is a competent Vision-Language Model. Please check out our paper for more details and see the dmeo below.

Demo

The examples below are all example completions (in orange) from PaLM-E. The prompt is the one or more images and the text in gray.

![Given <b>img</b>. Q: Who are the two teams playing in this photo, and which was the last to win a championship, and which year did they win, and who was their star player that year? A: Let's think step by step. [sep] 1. team in white is new york knick. 2. team in green is boston celtic. 3. last to win championship was boston celtic. 4. year was 2008. 5. star player was paul pierce. 6. i am not sure if this is correct. i am not basketball fan. i just google it.](img/demo_img/knicks_celtics.jpg)

![Given <b>img</b>. Q: What flavor is the pink doughnut on the right? A:[sep] strawberry.. Q: What flavor is the doughnut on the left? A blueberry.](img/demo_img/donut.png)

![Given <b>img</b>. Q: What will the robot do next? A: [sep] fall.](img/demo_img/darpa-robot.jpg)

![Robot: I am a robot operating in a kitchen. Given <b>img</b>, When a human asks me to do a task, I will respond with the sequence of actions I would do to accomplish the task with only the items I see. Human: Use all of the ingredients you see to make a cake batter. [sep]1. crack egg. 2. put egg in bowl. 3. put flour in bowl. 4. put sugar in bowl. 5. mix. 6. put in pan. 7. bake. 8. eat. 9. clean up.](img/demo_img/cake.jpg)

![Given <b>img</b>. Q: I am getting just two custom pizza for me and my friend. How much should I pay in total? A: Let's think step by step. [sep] 1st step: 2 custom pizzas. 2nd step: 2 x $9.99. 3rd step: $19.98.](img/demo_img/menu2.jpg)

![Given <b>img</b>. Q: What's in the image? Answer in emojis. A: [sep]🍏🍌🍇🍐🍑🍈🍒.](img/demo_img/fruits.jpg)

![Given <b>img</b>. Q: If a robot wanted to be useful here, what steps should it take? A: [sep]clean table..pick up trash.. pick up chairs.. wipe chairs.. put chairs down.](img/demo_img/cleaning.jpg)

![Given <b>img</b>. Q: How many championship rings did the player on the left win in his career A: Let’s think step by step. [sep] 1. kobe bryant is on the left. 2. kobe bryant has won 5 championship rings.](img/demo_img/kobe.jpg)

![Given <b>img</b>. Q: Can I go down this street on a bicycle, yes or no? A: Let's think step by step. [sep] 1. do not enter. 2. except bicycles. 3. do not enter except bicycles. 4. yes.](img/demo_img/bike.png)

![I see (image omitted). Description: A cow sitting on a rug. Joke: Why is the cow sitting on a rug? Because it wants to be a cow-ch!I see <b>img</b>.[sep] Description: a donkey is carrying a dog, cat, and rooster. Joke: what do you call a donkey with a rooster on his back. A rooster booster.](img/demo_img/donkey.jpg)

![Photo 1: <b>img1</b>. Photo 2: <b>img2</b>. Q: What is in Photo 1 but NOT in Photo 2? A: Let's think step by step and explain.[sep] Photo 1 has sunglasses on top of folded clothes. Photo 2 does not have sunglasses on top of folded clothes. Therefore, sunglasses are in Photo 1 but not in Photo 2.](img/demo_img/compare.png)

![Photo 1, at 10:30 am: <b>img1</b>. Photo 2, at 12:45 pm: <b>img2</b>. Photo 3, at 3:45 pm: <b>img3</b>. Q: I forget, what did I have for lunch, and what time was it? A: Let's think step by step. [sep]1. you had a sandwich for lunch. 2. it was 12:45 pm.](img/demo_img/diary.png)

PaLM-E response in orange shade.

![Given <b>img</b>. Q: What was the task? A:[sep]Prediction: make one vertical line out of the red and blue blocks, then make a vertical line out of the green and yellow blocks](img/demo_img/lt1.png)

![Given <b>img</b>. Q: What might have happend? A:[sep]Prediction: move the green circle to the left of the green star](img/demo_img/lt2.png)

![Robot: I am a robot operating in an office kitchen. When a human asks me to do a task, I will respond with the sequence of actions I would do to accomplish the task. Human: apportez-moi les chips de riz du tiroir. Robot: 1. go to drawers. 2. open top drawer. I see <b>img</b> [sep]3. pick green rice chip bag from top drawer and place on counter.](img/demo_img/bag_chip.png)

![Robot: I am a robot operating in an office kitchen. When a human asks me to do a task, I will respond with the sequence of actions I would do to accomplish the task. Human: bring me an apple. Robot: 1. go to apple. I see <b>img</b> [sep] 2. pick apple. 3. go to robot operator. 4. place apple. 5. done.](img/demo_img/apple.png)

![Robot: I am a robot operating in an office kitchen. When a human asks me to do a task, I will respond with the sequence of actions I would do to accomplish the task. Human: bring me a green star. Robot: 1. go to green star. I see <b>img</b> [sep] 2. pick green star.. 3. go to robot operator.. 4. place green star.. 5. done.](img/demo_img/greenstar.png)

![Given <b>img</b>. Q: How to grasp the red object?[sep]A: First grasp the green object an place it on the table, then grasp the red object.](img/demo_img/damp.jpg)

![Given <b>img</b>. Q: Which block is not currently in the group? A:[sep] red circle.](img/demo_img/lt3.png)

![Given <b>img</b>. Q: Which blocks of the same color are closest together? A:[sep] yellow blocks.](img/demo_img/lt4.png)

![Given <b>img</b>. Q: What was the task? A:[sep]put all the blocks in a horizontal line.](img/demo_img/lt5.png)

![Given <b>img</b>. Q: Which block is missing from the horizontal line? A:[sep] blue triangle.](img/demo_img/lt6.png)

![Given <b>img</b>. Q: How to stack the yellow object on top of the blue plate? [sep] A: First grasp the orange object with the left arm and place it on the table, then grasp yellow object with the left arm and place it on the table, then grasp the yellow object with the right arm and place it on the blue plate.](img/demo_img/biarm.jpg)

![Given <b>img</b>. Q: How to stack the white object on top of the red object? [sep] A: First grasp the red object and place it on the table, then grasp the white object and place if on the red object.](img/demo_img/biarm2.jpg)

Acknowledgements

The authors would like to thank, for their advice, help and support: Xi Chen, Etienne Pot, Sebastian Goodman, Ted Xiao, Keerthana Gopalakrishnan, Kehang Han, Henryk Michalewski, Neil Houlsby, Basil Mustafa, Justin Gilmer, Yonghui Wu, Erica Moreira, Victor Gomes, Tom Duerig, and Kendra Byrne.